Future Pixel phones should have these AI-enabled features

Robert Triggs / Android Authority

Google’s Pixel phones weren’t the first to use AI to push the envelope of smartphone capabilities, but they’ve consistently showcased some of the best examples of enhancements that benefit day-to-day use. From better video capture using HDRNet to features like Photo Unblur and even audio message transcription, the Pixel series has leaned on the NPU and machine-learning cores in chips like the Tensor G2 to create subtle yet transformative user experiences.

While the Google Pixel series continues to push the boundaries of computational photography and ambient computing, there are still a few features we think could benefit from Google’s magic touch. Here are a few AI-enabled features we want to see on future Pixel smartphones.

Better Magic Eraser

Rita El Khoury / Android Authority

Last year, Google introduced the Magic Eraser tool alongside the Pixel 6 series to remove unwanted subjects and objects from photographs. It followed that up with Face Unblur and Photo Unblur, which, well, unblur shaky photos using the machine learning capabilities of the Tensor processor.

However, I’d like to see Google take Magic Eraser even further. Following in the footsteps of Samsung’s Object Eraser tool, I’d like Google to add the ability to remove or adjust shadows and reflections. Samsung’s implementation is closer to Photoshop’s Content-Aware Fill, which is to say less than perfect, but I can see Google’s AI expertise and enormous photo datasets closing the gap with better spatial awareness and a cleaner result.

Taking photos of screens and displays is also a fairly common use case for smartphones. All too often, though, you’ll end up with a moire pattern on the screen, caused by interference between the display’s pixel grid and the pixel grid and Bayer filter of your phone’s camera sensor. This pattern can make text unintelligible, and the same effect can make tightly woven fabrics look odd. Samsung’s Enhance-X app already lets you remove moire patterns, and it would be great to see Google bake a similar tool into Google Photos or, better still, into the camera app itself.
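To see why a fine screen grid turns into broad stripes, it helps to treat the camera sensor as a grid that samples the scene at a fixed rate. The Python sketch below is a simplified one-dimensional model, an assumption for illustration rather than how any Pixel actually processes images: a repeating pattern finer than half the sampling rate "folds" down to a lower, false frequency. That aliasing is the mechanism behind moire. The function name `alias_frequency` is hypothetical.

```python
import math


def alias_frequency(pattern_freq: float, sample_rate: float) -> float:
    """Apparent frequency of a repeating pattern after it is sampled.

    Simplified 1-D model (an assumption for illustration): both values are
    in cycles per unit length, e.g. cycles per sensor pixel.
    """
    if sample_rate <= 0:
        raise ValueError("sample_rate must be positive")
    # Frequencies that differ by a multiple of the sample rate produce
    # identical samples, so fold the pattern into [0, sample_rate / 2].
    folded = pattern_freq % sample_rate
    return min(folded, sample_rate - folded)


if __name__ == "__main__":
    # A screen grid at 0.9 cycles per pixel, sampled once per pixel...
    apparent = alias_frequency(0.9, 1.0)
    print(f"apparent frequency: {apparent:.2f} cycles/pixel")
    # ...gives the same samples as a much coarser pattern, i.e. a broad
    # band repeating roughly every 10 pixels: a moire stripe.
    for n in range(5):
        fine = math.cos(2 * math.pi * 0.9 * n)
        coarse = math.cos(2 * math.pi * apparent * n)
        print(n, round(fine, 6), round(coarse, 6))
```

In this toy model, a pattern that should be too fine to resolve shows up as a coarse stripe instead of disappearing, which is why moire removal has to reconstruct the missing detail rather than simply blur the image.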

Image restoration

Dhruv Bhutani / Android Authority

Google isn’t shy about pushing advanced photo editing tools into the Pixel experience. Traditionalists might argue that optional tools like Photo Unblur and Magic Eraser tamper with the original photograph, but the end result is a clean, aesthetically pleasing image free of distractions.

Bringing that same computational approach to image restoration is a no-brainer. In fact, Google talked about fixing blurry film photos at the Pixel 7 launch event. An easy extension of that feature would be the ability to correct white balance in old scans. Taking it a step further, Google’s AI tools could be extended to fix defects like grain and scratches or add color to a black-and-white image. The possibilities are endless.

We recently compared the Pixel’s built-in Photo Unblur feature with manual correction in Photoshop, and the results weren’t far apart. A few simple additions would be all it takes to make a Google Pixel phone the best photo studio in your pocket.

View synthesis

Ever wished you’d changed your perspective slightly before capturing a shot? Perspective correction tools exist, but they tend to distort the image. View synthesis could fix that without the distortion. The futuristic technique uses machine learning to generate alternative views of a scene from multiple input pictures.

Google’s engineers have been experimenting with view synthesis for a while now and have implemented something similar in the Cinematic photos that Google Photos suggests automatically. In fact, view synthesis was under consideration for the ill-fated Google Clips camera but was dropped because of its high computational requirements. A future Tensor chipset, however, might have enough grunt to run the feature on-device.

The computational technique could capitalize on the multiple frames captured when shooting HDR Plus images and let you subtly alter oddly skewed images without tearing or distortion.

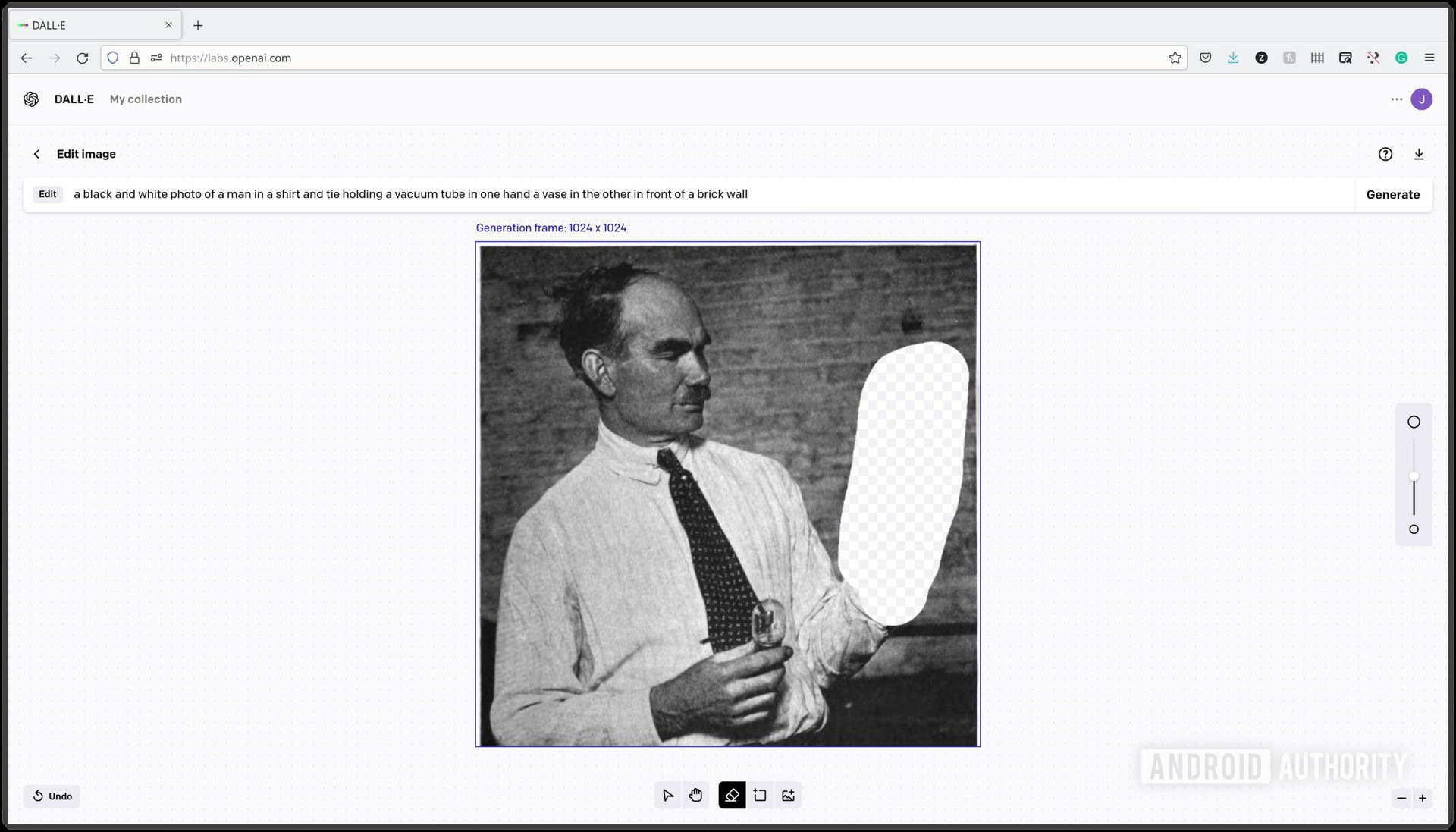

Built-in Stable Diffusion

Zak Khan / Android Authority

Cropped in a bit too close to the subject? Perhaps you’d like more of the backdrop for the perfect creative aesthetic. Photographers have long used Photoshop’s Content-Aware Fill tool to extend the boundaries of a photograph. However, image generation models like Stable Diffusion and DALL-E 2 make it easier to zoom out and convincingly fill in more of the frame.

An on-device AI tool for expanding your images would be a fantastic addition, given the Pixel’s photography-centric ambitions. In fact, Snapseed, the Google-owned photo editor, already has an expansion tool built in. However, that tool uses contextual cues from the existing image to zoom out of the shot. On-device Stable Diffusion, running on the Pixel’s ML cores and paired with Google’s vast datasets, could make the tool drastically better by using AI to add more of the background back into the picture.

Full-resolution HDR shots

Dhruv Bhutani / Android Authority

With the new-fangled high-resolution sensors in modern Pixel phones, it’s reasonable to expect that users want full-resolution images with the same HDR Plus magic we get on the downsampled 12MP shots.

Look, pixel binning greatly benefits low-light photography and noise levels, but sometimes you just want the highest-resolution image possible. Brands like Realme have already talked about using the full-resolution output from the camera sensor to render the final results.

A full-resolution 50MP shot with all the same advantages as the Pixel’s standard 12MP photographs would allow users to crop in at will or blow up the image as large as they wish. For users who want maximum flexibility while editing their photos, it would be a great addition without any of the fuss associated with processing RAW images. Sure, it might take a bit more time to render the shot, but offering it as an optional setting should be a no-brainer, and I’d love to see it on the next generation of Pixel hardware.
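The trade-off here comes down to simple arithmetic. 2x2 binning combines each block of four photosites into one output pixel, so a 50MP sensor yields roughly 50 / 4 = 12.5MP. In an idealized model where each photosite carries independent noise, averaging four readings also cuts the noise standard deviation by a factor of √4 = 2. The NumPy sketch below illustrates that idealized model. It treats the sensor as a single-channel array and ignores the color filter layout, whereas real Quad Bayer sensors combine same-colored photosites. The function name `bin_2x2` is hypothetical.

```python
import numpy as np


def bin_2x2(raw: np.ndarray) -> np.ndarray:
    """Average each 2x2 block of a single-channel image into one pixel.

    Simplified model (assumption): ignores the sensor's color filter
    layout. Odd trailing rows/columns are dropped.
    """
    h, w = raw.shape
    trimmed = raw[: h - h % 2, : w - w % 2]
    # Split each axis into (blocks, 2) and average over the in-block axes.
    return trimmed.reshape(
        trimmed.shape[0] // 2, 2, trimmed.shape[1] // 2, 2
    ).mean(axis=(1, 3))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Flat grey scene with Gaussian noise (std 8) on every photosite.
    full_res = 100.0 + rng.normal(0.0, 8.0, size=(400, 600))
    binned = bin_2x2(full_res)
    print("pixels:", full_res.size, "->", binned.size)  # 4x fewer pixels
    print(f"noise std: {full_res.std():.2f} -> {binned.std():.2f}")  # roughly halved
```

Running it shows four times fewer pixels and roughly half the noise. A full-resolution mode would keep every photosite but give up that built-in averaging, leaving more of the noise reduction to multi-frame processing like HDR Plus.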

Quality of life additions

Dhruv Bhutani / Android Authority

For all of Google’s focus on original and forward-looking features, it could stand to crib a few features from other Android OEMs and iPhones. To start with, I’d love to see Google copy the excellent screenshot pixelation feature from Oppo and OnePlus phones that can blur out key information like contact photos or phone numbers automatically.

Similarly, iOS 16’s ability to lift subjects out of a photo can come across as a gimmick, but it’s a nifty way to make custom stickers, add objects to documents, or explore other creative uses. I’d love to see Google offer the same.

From spam filtering to better audio-based services, music recognition, and photography-related enhancements, the Pixel series has debuted many features that have benefited from artificial intelligence and machine learning. What other features would you want to see on upcoming Pixel phones? Let us know below.